Creating Summaries with NLP (Part 2)

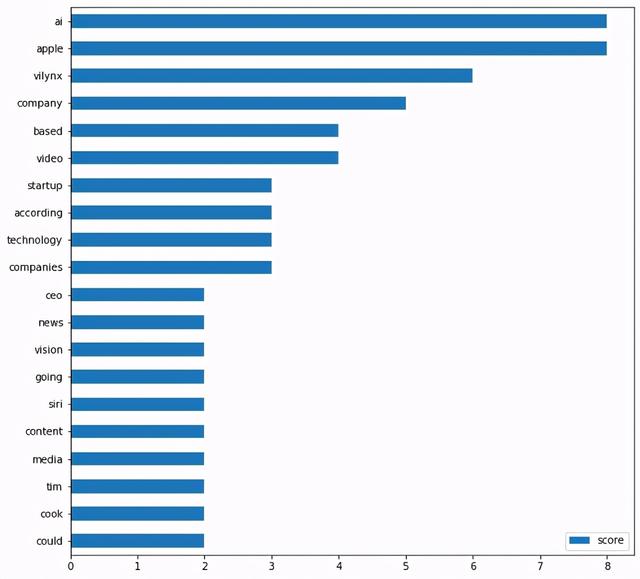

Let's turn it into a horizontal bar chart that shows only the top 20 words, using the helper function below.

    # Helper function for plotting the top words.
    import pandas as pd
    import matplotlib.pyplot as plt

    def plot_top_words(word_count_dict, show_top_n=20):
        word_count_table = pd.DataFrame.from_dict(word_count_dict, orient='index').rename(columns={0: 'score'})
        word_count_table.sort_values(by='score').tail(show_top_n).plot(kind='barh', figsize=(10, 10))
        plt.show()

Let's display the top 20 words.

    plot_top_words(word_count, 20)


From the chart above, we can see that the words "ai" and "apple" appear at the top. This makes sense, because the article is about Apple acquiring an AI startup.

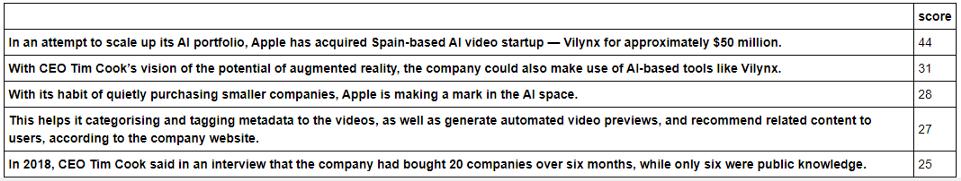

(7) Rank sentences by score

Now we rank each sentence by importance using its sentence score. We will:

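- Drop sentences of 30 words or more, since long sentences are not always meaningful;

- Then add up the scores of the words that make up each sentence to form the sentence score.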

Note: in my experience, a cutoff anywhere between 25 and 30 words gives a good summary.

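    # Create an empty dictionary to store sentence scores.
    sentence_score = {}

    # Loop over the tokenized sentences, keep only those under 30 words,
    # and add up the word scores to form each sentence score.
    for sentence in sentences:
        # Check whether each word of the sentence is in the word-count dictionary.
        for word in nltk.word_tokenize(sentence.lower()):
            if word in word_count.keys():
                # Only accept sentences with fewer than 30 words.
                if len(sentence.split(' ')) < 30:
                    # Add the word score to the sentence score.
                    if sentence not in sentence_score.keys():
                        sentence_score[sentence] = word_count[word]
                    else:
                        sentence_score[sentence] += word_count[word]

We convert the sentence-score dictionary into a DataFrame and display the sentence scores.

Note: a dictionary has no built-in method for sorting sentences by score, so the data stored in it is converted to a DataFrame, where `sort_values` does the job.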

    df_sentence_score = pd.DataFrame.from_dict(sentence_score, orient='index').rename(columns={0: 'score'})
    df_sentence_score.sort_values(by='score', ascending=False)
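The scoring and ranking step can also be sketched without pandas. The version below is a minimal illustration rather than the notebook's code: `rank_sentences`, the `max_words` parameter, the regex tokenizer (a stand-in for `nltk.word_tokenize`), and the toy data are all assumptions made for this example. It checks sentence length once per sentence and orders the result with `sorted()`.

```python
import re


def rank_sentences(sentences, word_count, max_words=30):
    """Score sentences shorter than max_words; return (sentence, score) pairs, best first."""
    scores = {}
    for sentence in sentences:
        # Skip long sentences up front instead of re-checking the length for every word.
        if len(sentence.split()) >= max_words:
            continue
        # Simple regex tokenizer standing in for nltk.word_tokenize (assumption).
        for word in re.findall(r"[a-z0-9']+", sentence.lower()):
            if word in word_count:
                scores[sentence] = scores.get(sentence, 0) + word_count[word]
    # sorted() orders the dictionary items by score, highest first.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical word scores and sentences, for illustration only.
toy_word_count = {"apple": 10, "ai": 8, "startup": 5}
toy_sentences = [
    "Apple bought an AI startup.",
    "The weather was nice.",
    "Apple is investing in AI.",
]

ranked = rank_sentences(toy_sentences, toy_word_count)
for sentence, score in ranked:
    print(score, sentence)
```

Making the cutoff a parameter makes it easy to try values in the 25 to 30 range mentioned in the note above. Sentences with no scored words never enter the result, which matches the behaviour of the dictionary-based loop.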

(8) Select the top sentences as the summary

We use the heap queue algorithm to select the top 3 sentences and store them in the best_sentences variable.

Usually 3 to 5 sentences are enough. Depending on the length of the document, feel free to change the number of top sentences to display.

In this example I chose 3, because our text is relatively short.
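As a small illustration of the heap-based selection described above, the sketch below applies `heapq.nlargest` to a made-up dictionary of scores. The sentences and scores are hypothetical and only show how the call behaves when it is given a dictionary and `key=dict.get`.

```python
import heapq

# Hypothetical scores, listed in document order.
toy_scores = {
    "Sentence A.": 12,
    "Sentence B.": 21,
    "Sentence C.": 7,
    "Sentence D.": 30,
}

# Iterating a dict yields its keys; key=toy_scores.get ranks each key by its score.
top_two = heapq.nlargest(2, toy_scores, key=toy_scores.get)
print(top_two)  # highest score first

# Re-emit the selected sentences in their original document order.
in_order = [s for s in toy_scores if s in top_two]
print(in_order)
```

`nlargest` returns the winners ordered by score, so a second pass over the original sequence is needed if the summary should read in document order.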

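    import heapq

    # Pick the three best sentences as the summary.
    best_sentences = heapq.nlargest(3, sentence_score, key=sentence_score.get)

Let's print the summary text with print and a for loop.

    print('SUMMARY')
    print('------------------------')

    # Show the top sentences in the order they appear in the original text.
    for sentence in sentences:
        if sentence in best_sentences:
            print(sentence)

The Jupyter notebook is available on my GitHub, along with an executable Python file that you can use right away to summarize your own text.

Let's see the algorithm in action! Below is the original text of a news article titled "Apple Acquires AI Startup for $50 Million to Advance Its Apps":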

In an attempt to scale up its AI portfolio, Apple has acquired Spain-based AI video startup — Vilynx for approximately $50 million.

Reported by Bloomberg, the AI startup — Vilynx is headquartered in Barcelona, which is known to build software using computer vision to analyse a video’s visual, text, and audio content with the goal of “understanding” what’s in the video. This helps it categorising and tagging metadata to the videos, as well as generate automated video previews, and recommend related content to users, according to the company website.

Apple told the media that the company typically acquires smaller technology companies from time to time, and with the recent buy, the company could potentially use Vilynx’s technology to help improve a variety of apps. According to the media, Siri, search, Photos, and other apps that rely on Apple are possible candidates as are Apple TV, Music, News, to name a few that are going to be revolutionised with Vilynx’s technology.

With CEO Tim Cook’s vision of the potential of augmented reality, the company could also make use of AI-based tools like Vilynx.

The purchase will also advance Apple’s AI expertise, adding up to 50 engineers and data scientists joining from Vilynx, and the startup is going to become one of Apple’s key AI research hubs in Europe, according to the news.

Apple has made significant progress in the space of artificial intelligence over the past few months, with this purchase of UK-based Spectral Edge last December, Seattle-based Xnor.ai for $200 million and Voysis and Inductiv to help it improve Siri. With its habit of quietly purchasing smaller companies, Apple is making a mark in the AI space. In 2018, CEO Tim Cook said in an interview that the company had bought 20 companies over six months, while only six were public knowledge.
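For readers who want to try the whole pipeline on a text like the one above without installing anything, here is a minimal standard-library sketch. It is not the notebook's implementation: the `summarize` function, the regex-based sentence splitter and tokenizer, and the tiny stop-word list are all assumptions that stand in for NLTK, and raw word counts are used as word scores, so the selected sentences may differ from the notebook's output.

```python
import heapq
import re
from collections import Counter

# Tiny illustrative stop-word list (assumption); NLTK ships a much fuller one.
STOP_WORDS = {"the", "a", "an", "of", "to", "in", "and", "is", "for",
              "with", "that", "its", "it", "as", "on"}


def tokenize(sentence):
    """Lowercase a sentence and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", sentence.lower())


def summarize(text, top_n=3, max_words=30):
    """Return up to top_n highest-scoring sentences, in original order."""
    # Naive sentence splitter: break after ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    # Word scores are raw counts of non-stop-words (assumption).
    word_count = Counter(w for s in sentences for w in tokenize(s) if w not in STOP_WORDS)
    scores = {}
    for sentence in sentences:
        if len(sentence.split()) >= max_words:
            continue  # skip long sentences
        score = sum(word_count[w] for w in tokenize(sentence) if w in word_count)
        if score:
            scores[sentence] = score
    best = set(heapq.nlargest(top_n, scores, key=scores.get))
    return [s for s in sentences if s in best]


# Short made-up text for a quick check.
toy_text = ("Apple acquired an AI startup. The startup builds AI video tools. "
            "Analysts were surprised. Apple plans to use the AI tools in its apps.")
for line in summarize(toy_text, top_n=2):
    print(line)
```

Passing a longer article as `text` works the same way. The splitter relies on whitespace after sentence-ending punctuation, so text whose sentences run together without spaces needs cleaning first.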
